RoRD: Rotation-Robust Descriptors and Orthographic Views for Local Feature Matching

Udit Singh Parihar*1, Aniket Gujarathi*1, Kinal Mehta*1, Satyajit Tourani*1, Sourav Garg2, Michael Milford2 and K. Madhava Krishna1

* Denotes Equal Contribution

1 Robotics Research Center, IIIT Hyderabad.

2 QUT Centre for Robotics, Queensland University of Technology (QUT), Australia.

Paper | Code | Thesis Slides

Accepted to IROS 2021

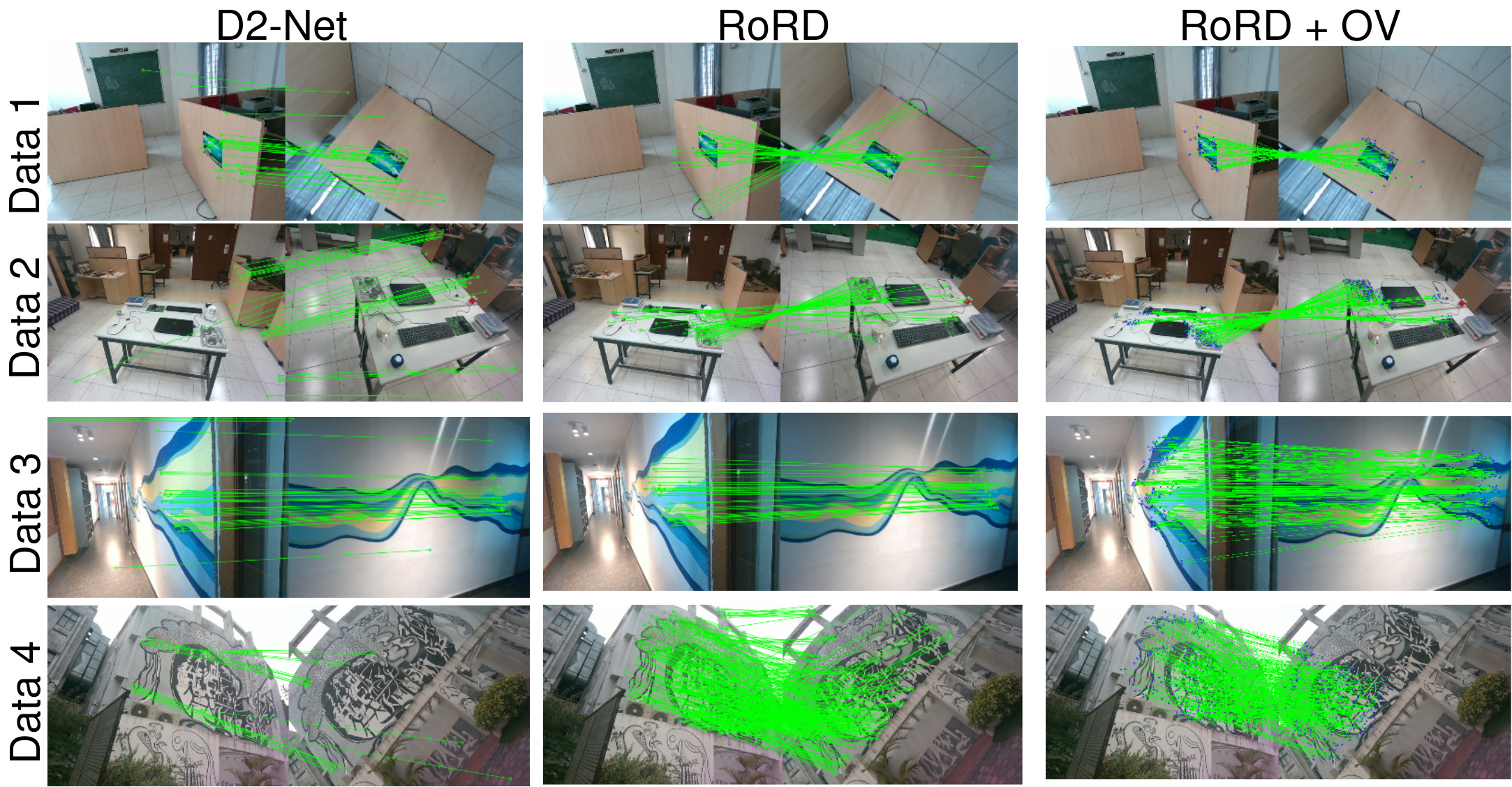

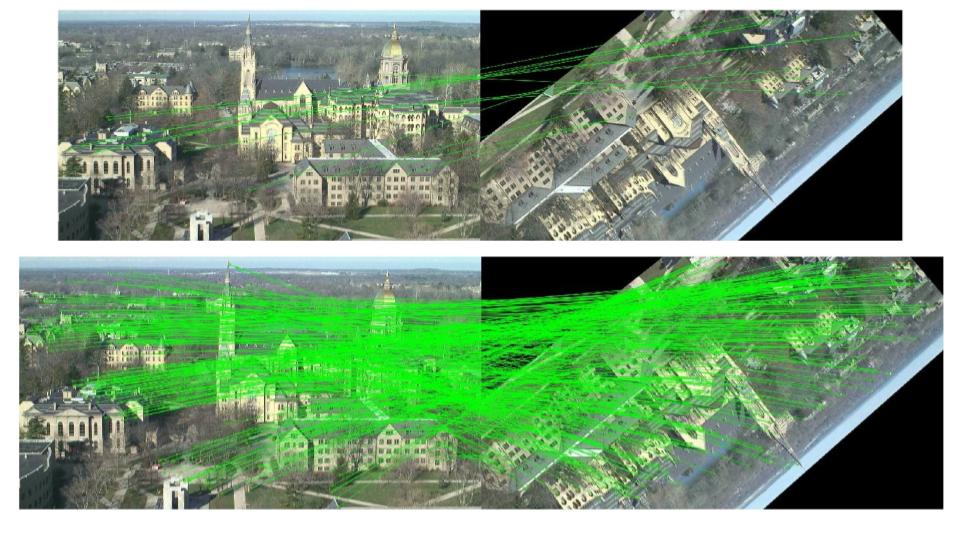

Local feature matches using RoRD. Our method RoRD finds precise local feature correspondences under extreme viewpoint (180 degrees) changes for both indoor and outdoor sequences.

Abstract

We present a novel framework that combines learning of invariant descriptors through data augmentation and orthographic viewpoint projection. We propose rotation-robust local descriptors, learnt through training data augmentation based on rotation homographies, and a correspondence ensemble technique that combines vanilla feature correspondences with those obtained through rotation-robust features. Using a range of benchmark datasets as well as contributing a new bespoke dataset for this research domain, we evaluate the effectiveness of the proposed approach on key tasks including pose estimation and visual place recognition.

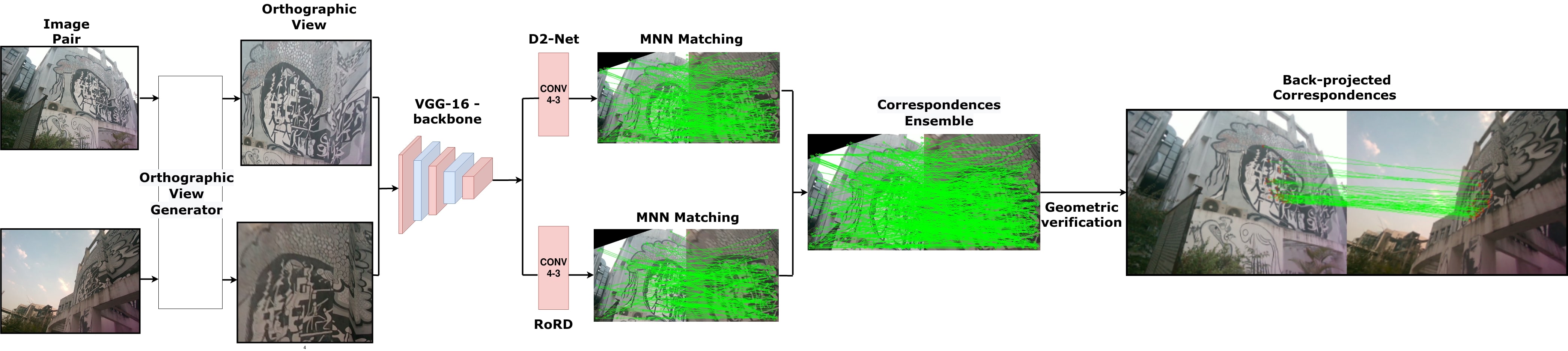

RoRD pipeline. Our approach takes a pair of perspective view images with significant viewpoint as input to the Orthographic View Generator, which aligns the camera to the plane-surface-normal to generate the top views. These top views are then passed to an ensemble of Vanilla D2-Net and RoRD techniques (ours). This approach creates precise feature correspondences that are robust to variations in viewpoints.

Dataset

The DiverseView dataset consists of 4 scenes of indoor and outdoor locations, with images captured at high viewpoint changes and camera rotations (up to 180 degrees). For the data collection, we have used the Intel RealSense D455 camera with RGB, Depth, and IMU sensors.

The three sequences of this dataset are as follows:

- Sequence 1 consists of 1138 images of an office-desk scene captured by moving around it in a semi-circular path, thus exhibiting extreme rotation variations.

- Sequence 2 consists of 2534 images obtained from a 360 degrees traversal about a table-top.

- Sequence 3 consists of 1519 images facing a wall with high viewpoint changes but limited camera rotations.

- Sequence 4 consists of 3931 images captured around a building with graffiti-art with varied camera viewpoints and rotations in low-lighting dusk conditions.

Results

DiverseView Dataset

Qualitative results from the DiverseView dataset. Each row corresponds to a different sequence from the dataset. RoRD leverages its learnt rotation-robust features to obtain precise feature correspondences, outperforming D2-net. Incorporating orthographic views with RoRD, (RoRD + OV) further improves performance, and outperforms both D2-Net and RoRD for all the four sequences.

HPatches Dataset

| Model | Standard | Rotated | Average |

|---|---|---|---|

| SIFT | 0.52/0.54/0.54 | 0.51/0.51/0.52 | 0.52/0.53/0.53 |

| SuperPoint | 0.69/0.71/0.73 | 0.21/0.22/0.22 | 0.45/0.46/0.48 |

| D2-Net | 0.73/0.81/0.84 | 0.17/0.20/0.22 | 0.45/0.50/0.53 |

| (Ours) RoRD | 0.68/0.75/0.78 | 0.46/0.57/0.62 | 0.57/0.66/0.70 |

| (Ours) RoRD Comb. | 0.71/0.78/0.81 | 0.44/0.54/0.59 | 0.58/0.66/0.70 |

| (Ours) RoRD + CE | 0.79/0.84/0.86 | 0.48/0.59/0.64 | 0.64/0.72/0.75 |

Quantitative results for MMA on the HPatches dataset using pixel thresholds 6/8/10. We highlight First, Second and Third best MMA values. We also provide averages over the results obtained by the Standard and Rotated HPatches dataset. Our Ensemble method ,RoRD + CE, outperforms all other methods with RoRD and SIFT being the second and the third best performer for the averaged HPatches datasets, respectively. However on the Rotated HPatches SIFT is able to outperforms other methods, with RORD Ensemble being the close second.

Footnote:

In the IEEE’s published version of this paper (DOI: 10.1109/IROS51168.2021.9636619), SIFT’s results in the Rotated and Average columns of MMA table are incorrect, which we have now corrected in the latest arXiv version and over GitHub: https://github.com/UditSinghParihar/RoRD. This change only affects the performance ranking of MMA evaluation on the HPatches dataset, where our proposed method still ranks the best when considering standard and average settings. Other results in the paper which benchmark against SIFT, that is, Pose Estimation and Visual Place Recognition, are not affected.

BibTex

If you use this code in your project, please cite the following paper:

```bibtex @inproceedings{parihar2021rord, title={RoRD: Rotation-Robust Descriptors and Orthographic Views for Local Feature Matching}, author={Parihar, Udit Singh and Gujarathi, Aniket and Mehta, Kinal and Tourani, Satyajit and Garg, Sourav and Milford, Michael and Krishna, K Madhava}, booktitle={2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, pages={1593–1600}, organization={IEEE} }